Machine learning is one of the most exciting and impactful fields of artificial intelligence, but it is also one of the most complex and challenging. To successfully implement machine learning projects, data scientists and engineers need to follow a systematic and rigorous workflow, from data collection to model deployment. This workflow is often referred to as a machine learning pipeline. But what exactly is a machine learning pipeline, how does it work, and why does it matter?

In this article, I will answer these questions and explain the benefits and best practices of using ML Pipelines. I will also introduce some of the tools and frameworks that can help you build and manage your own ML Pipelines.

So, whether you are a beginner or an expert in machine learning, this article will help you understand and appreciate the power and potential of ML Pipelines. Keep on reading till the end to learn more…

What is Machine Learning?

First things first, let us talk about what Machine Learning is.

It is a branch of artificial intelligence that enables computers to learn from data and then make predictions or decisions without being explicitly programmed.

Machine learning has many applications in various domains, such as natural language processing, computer vision, recommender systems, self-driving cars, and more.

However, machine learning is not a simple process that can be done in one step. It involves multiple stages, such as data collection, data preprocessing, feature engineering, model selection, model training, model evaluation, model deployment, and model monitoring. Each stage has its own challenges and complexities and requires different tools and techniques.

To manage this complexity and ensure the quality of projects, it is essential to use a machine learning pipeline. A machine learning pipeline is a sequence of steps that automates the workflow of a machine learning project, from data ingestion to model deployment.

A machine learning pipeline can also be seen as a way of organizing and structuring the code and logic of a machine learning project.

What is a Machine Learning Pipeline?

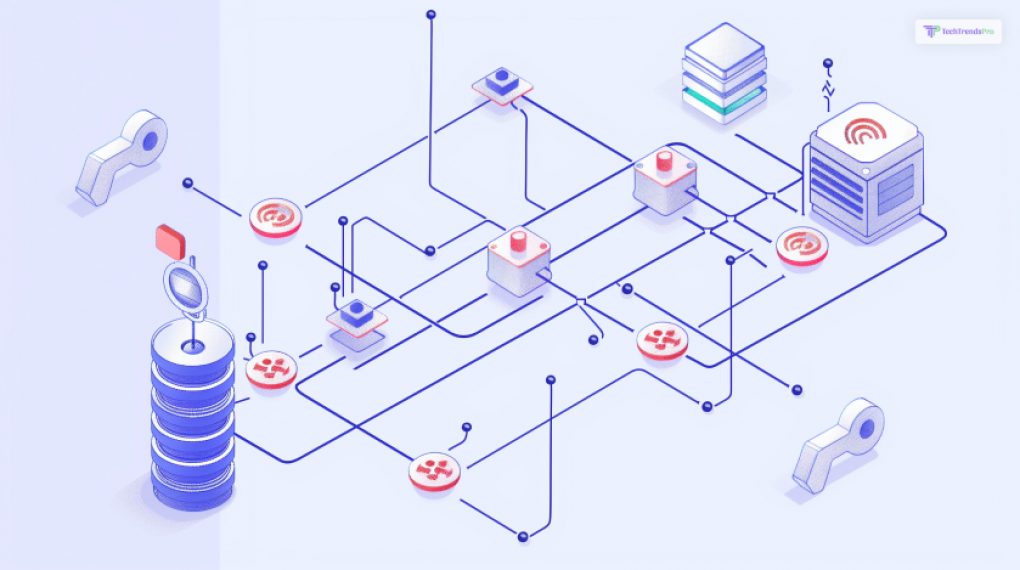

A machine learning pipeline is a way of organizing and automating the workflow of a machine learning project.

It consists of a series of steps that perform different tasks, such as data preprocessing, feature engineering, model training, model evaluation, and model deployment. Each step can be seen as a module that can be developed, tested, and optimized independently.

A machine learning pipeline can also be integrated with other components, such as data sources, cloud services, and web applications.
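To make the idea of independent, composable steps concrete, here is a minimal sketch in plain Python. The step names (`drop_missing`, `add_area`) and the sample rows are made up for illustration; a real project would more likely lean on a library such as Scikit-learn for this.

```python
from functools import reduce

def drop_missing(rows):
    """Preprocessing step: discard rows that contain a missing value."""
    return [r for r in rows if all(v is not None for v in r.values())]

def add_area(rows):
    """Feature engineering step: derive a new column from two existing ones."""
    return [{**r, "area": r["width"] * r["height"]} for r in rows]

def run_pipeline(rows, steps):
    """Feed the output of each step into the next one, in order."""
    return reduce(lambda data, step: step(data), steps, rows)

# Hypothetical raw data with one incomplete row.
raw = [
    {"width": 2.0, "height": 3.0},
    {"width": None, "height": 1.0},
    {"width": 4.0, "height": 0.5},
]

features = run_pipeline(raw, [drop_missing, add_area])
print(features)
```

Because every step takes rows in and hands rows back, each one can be tested or swapped out on its own without touching the rest.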

The main benefits of using a machine learning pipeline are:

- Firstly, it improves the efficiency and quality of the machine-learning process by saving time, reducing errors, and ensuring consistency and reproducibility.

- Secondly, it enables scalability and experimentation by allowing you to handle large, complex data sets and models and try different techniques and parameters.

- Lastly, it facilitates collaboration and deployment by making it easier for teams of data scientists and engineers to communicate and share their work and integrate their models into production systems.

How Does a Machine Learning Pipeline Work?

A machine-learning pipeline works by defining the inputs, outputs, and operations of each stage of the project. It then executes them in a sequential or parallel manner.

For example, a machine learning pipeline can take raw data as input, perform data preprocessing and feature engineering, train and evaluate a model, and deploy the model to a production environment.
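As a rough sketch of what "defining the inputs, outputs, and operations of each stage" might look like, the snippet below declares three stages and runs them sequentially. The `Stage` class, the `execute` function, and the toy "model" (just the mean of the data) are all assumptions made for illustration, not the API of any particular framework.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Stage:
    name: str
    inputs: tuple      # keys this stage reads from the shared context
    outputs: tuple     # keys this stage writes back to the shared context
    run: Callable      # the operation; returns one value per output key

def execute(stages, context):
    """Run stages in order, checking that each stage's inputs are available."""
    context = dict(context)
    for stage in stages:
        missing = [key for key in stage.inputs if key not in context]
        if missing:
            raise KeyError(f"{stage.name} is missing inputs: {missing}")
        results = stage.run(*(context[key] for key in stage.inputs))
        context.update(zip(stage.outputs, results))
    return context

stages = [
    Stage("preprocess", ("raw",), ("clean",),
          lambda raw: ([x for x in raw if x is not None],)),
    Stage("train", ("clean",), ("model",),
          lambda clean: (sum(clean) / len(clean),)),  # toy model: the mean
    Stage("evaluate", ("model", "clean"), ("mae",),
          lambda model, clean: (sum(abs(x - model) for x in clean) / len(clean),)),
]

result = execute(stages, {"raw": [1.0, None, 2.0, 3.0]})
print(result["model"], result["mae"])
```

Declaring inputs and outputs up front means a misconfigured pipeline fails with a clear error before the stage runs, rather than somewhere in the middle of training.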

Furthermore, this pipeline can be implemented using various tools and frameworks, such as Python, R, TensorFlow, PyTorch, Scikit-learn, Spark, AWS, Azure, and more. Depending on the tool or framework, a machine learning pipeline can be coded in a script, a notebook, a graphical user interface, or a configuration file.

This pipeline can also be integrated with other components, such as data sources, data warehouses, data lakes, cloud services, and web applications. This allows the machine learning pipeline to access and store data, leverage computing resources, and deliver the results to the end users.

Why Are ML Pipelines Popular?

ML Pipelines are popular because they offer many benefits. Wondering what they might be? Well, I have you covered! Here are some of them that you need to take a look at:

1. Efficiency

Firstly, ML Pipelines can automate and streamline the machine learning workflow, saving time and effort for data scientists and engineers. ML Pipelines can also run in parallel or distributed mode, speeding up the execution and reducing the cost.
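Here is a small, hedged illustration of the parallel mode mentioned above, using only Python's standard library. The partitions and the `summarize` function are invented for the example; a production pipeline would typically hand this work to a framework such as Spark.

```python
from concurrent.futures import ThreadPoolExecutor

def summarize(partition):
    """Independent unit of work: count and sum of one data partition."""
    return len(partition), sum(partition)

# Hypothetical data, already split into partitions.
partitions = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]

# Each partition is processed by its own worker; map() keeps the input order.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(summarize, partitions))

# Combine the partial results into a global statistic.
count = sum(n for n, _ in partials)
total = sum(s for _, s in partials)
mean = total / count
print(mean)
```

Threads mostly help when the work is I/O-bound, such as reading files or calling services. For CPU-heavy steps in CPython, `ProcessPoolExecutor` from the same module is usually the better fit.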

2. Reproducibility

Secondly, ML Pipelines can ensure that the same results are obtained when the same data and parameters are used, regardless of the environment or platform. They can also track and document the provenance and lineage of the data and the models, facilitating the debugging and auditing process.
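Two small habits go a long way toward this kind of reproducibility: seeding every source of randomness, and recording a fingerprint of the data and parameters behind each run. The sketch below shows both; the function names and the parameter keys (`seed`, `train_fraction`) are my own assumptions for illustration.

```python
import hashlib
import json
import random

def fingerprint(data, params):
    """Stable hash of data + parameters, usable as a run identifier."""
    payload = json.dumps({"data": data, "params": params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]

def split(data, params):
    """Shuffle with a local, seeded generator so the split is repeatable."""
    rng = random.Random(params["seed"])
    shuffled = list(data)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * params["train_fraction"])
    return shuffled[:cut], shuffled[cut:]

data = list(range(10))
params = {"seed": 42, "train_fraction": 0.8}

run_a = split(data, params)
run_b = split(data, params)
run_id = fingerprint(data, params)

print(run_a == run_b)  # same data + same parameters give the same split
print(run_id)
```

Keep in mind that a seed alone does not guarantee identical results across machines. Library and interpreter versions usually need to be pinned as well.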

3. Scalability

Thirdly, ML Pipelines can handle large and complex data sets and models by using appropriate tools and techniques, such as data partitioning, data sampling, feature selection, model compression, and more. ML Pipelines can also scale up or down according to the demand and availability of the resources.

4. Reliability

Fourthly, ML Pipelines can improve the quality and accuracy of the data and the models by applying data validation, data cleaning, data transformation, feature extraction, feature normalization, model validation, model testing, and more. ML Pipelines can also monitor and update the data and the models by using feedback loops, alerts, notifications, and more.
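Data validation is the easiest of these techniques to show in a few lines. Below is a minimal sketch that checks each row against a simple schema of types and allowed ranges. The schema format and the column names (`age`, `income`) are hypothetical; dedicated validation libraries offer far richer checks.

```python
def validate(rows, schema):
    """Return a list of readable problems; an empty list means the data passed."""
    problems = []
    for index, row in enumerate(rows):
        for column, (kind, low, high) in schema.items():
            value = row.get(column)
            if not isinstance(value, kind):
                problems.append(f"row {index}: {column} is not {kind.__name__}")
            elif not low <= value <= high:
                problems.append(f"row {index}: {column}={value} outside [{low}, {high}]")
    return problems

# Hypothetical schema: column -> (expected type, minimum, maximum).
schema = {"age": (int, 0, 120), "income": (float, 0.0, 1e7)}

rows = [
    {"age": 34, "income": 52000.0},
    {"age": -1, "income": 48000.0},   # out of range
    {"age": 51, "income": None},      # wrong type
]

problems = validate(rows, schema)
for problem in problems:
    print(problem)
```

Running a check like this at the start of the pipeline stops bad records before they reach training, where they are much harder to spot.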

5. Collaboration

Lastly, ML Pipelines can enable collaboration and communication among the stakeholders of the project, including data scientists, data engineers, software engineers, business analysts, and product managers. They can also facilitate the sharing and reuse of the data and the models by using standard formats, protocols, and interfaces.

Machine Learning Pipeline: Things to Keep in Mind!

ML Pipelines are a way of automating and streamlining the workflow of a machine learning project, from data ingestion to model deployment.

However, building a machine learning pipeline is not a trivial task, and it requires careful planning and execution.

Here are some of the things to keep in mind while making a machine-learning pipeline:

1. Define your objective and scope

Firstly, before you start building your pipeline, you should have a clear idea of what problem you are trying to solve, what data you need, what metrics you will use to measure success, and what resources and constraints you have.

2. Choose the right tools and frameworks

Secondly, depending on your use case and preferences, you may want to use different tools and frameworks to implement your pipeline. You should choose the ones that suit your needs and are compatible with each other.

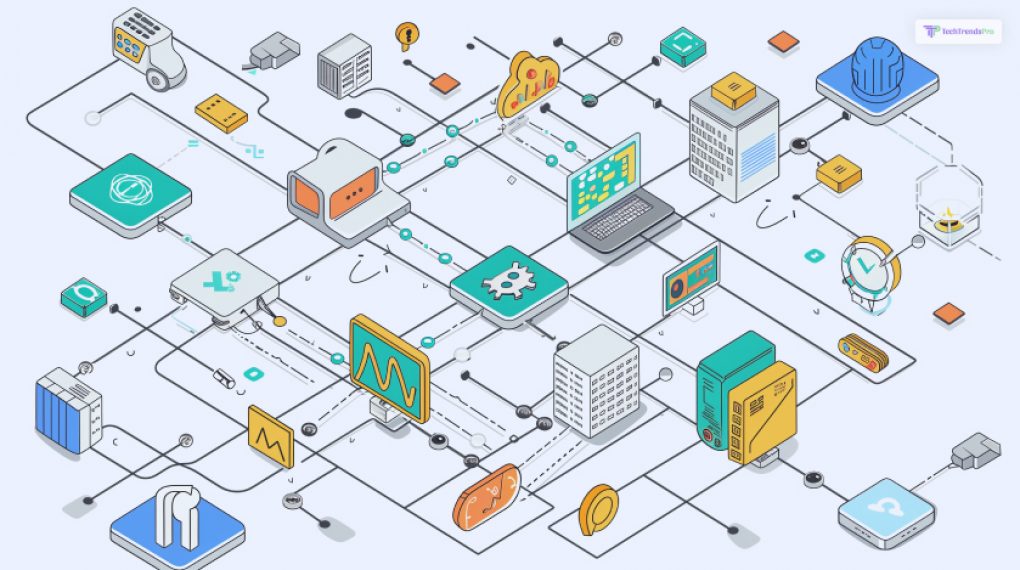

3. Design your pipeline architecture

You should design your pipeline architecture in a modular and scalable way. You can do so by breaking down your workflow into discrete and reusable steps.

These include data preprocessing, feature engineering, model training, model evaluation, and model deployment. You should also define the inputs, outputs, and dependencies of each step and how they will communicate and interact with each other.
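Once the dependencies of each step are written down, a valid execution order can be derived from them automatically. The sketch below uses `graphlib` from Python's standard library (available from Python 3.9); the step names are placeholders I picked to mirror the stages discussed in this article.

```python
from graphlib import TopologicalSorter

# Each key depends on the steps listed in its set (hypothetical step names).
dependencies = {
    "preprocess": {"ingest"},
    "features": {"preprocess"},
    "train": {"features"},
    "evaluate": {"train", "features"},
    "deploy": {"evaluate"},
}

# static_order() yields every step after all of the steps it depends on.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

If two steps ever depend on each other, `TopologicalSorter` raises a `CycleError`, which is a handy early warning that the architecture needs another look.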

4. Implement and test your pipeline components

You should implement and test each component of your pipeline separately by using appropriate tools and techniques, such as data validation, data cleaning, data transformation, feature extraction, feature normalization, model validation, and model testing.

You should also document your code and logic. Ensure that you follow the best practices of software engineering, such as version control, code review, and debugging.
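As an example of testing one component in isolation, here is a small feature normalization function with its own unit tests, written with Python's built-in `unittest` module. The function `min_max_normalize` and the decision to map a constant column to zeros are assumptions for this sketch, not a standard.

```python
import unittest

def min_max_normalize(values):
    """Scale a non-empty list linearly into [0, 1]; a constant column maps to zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]

class MinMaxNormalizeTest(unittest.TestCase):
    def test_range(self):
        self.assertEqual(min_max_normalize([10, 20, 30]), [0.0, 0.5, 1.0])

    def test_constant_column(self):
        # Guards against a division by zero when all values are equal.
        self.assertEqual(min_max_normalize([7, 7, 7]), [0.0, 0.0, 0.0])

# Run the tests programmatically so the snippet also works inside a notebook.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(MinMaxNormalizeTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
print(outcome.wasSuccessful())
```

Tests like these belong in version control next to the component, so every code review and every change re-checks the same behavior.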

5. Deploy and monitor your pipeline

Lastly, you should deploy your pipeline to a production environment by using suitable tools and services, such as cloud platforms, web applications, and APIs.

You should also monitor your pipeline performance and quality by using feedback loops, alerts, notifications, and more. Also, you should update your pipeline as needed by adding new features, data, or models or by fixing bugs or errors.
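A very simple form of monitoring is to compare incoming data against the data the model was trained on and raise an alert when it shifts too far. The sketch below does this for a single numeric feature. The `drift_alert` function, the sample values, and the threshold of three standard deviations are all illustrative assumptions; real systems tend to use more robust statistical tests.

```python
from statistics import mean, stdev

def drift_alert(reference, live, threshold=3.0):
    """Flag a feature whose live mean moved more than `threshold`
    reference standard deviations away from the reference mean."""
    shift = abs(mean(live) - mean(reference)) / stdev(reference)
    return shift > threshold, shift

# Hypothetical feature values: training-time reference vs. two live batches.
reference = [10.0, 11.0, 9.0, 10.5, 9.5]
stable = [10.2, 9.8, 10.1]
shifted = [14.0, 15.0, 16.0]

for name, batch in [("stable", stable), ("shifted", shifted)]:
    alert, shift = drift_alert(reference, batch)
    print(f"{name}: alert={alert}, shift={shift:.2f}")
```

An alert like this can feed the notifications mentioned above or trigger a retraining run.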

Chronicles: Examples of Successful Companies That Use Machine Learning Pipelines

Machine learning pipelines are workflows that automate the processes of data ingestion and preprocessing, as well as the training, evaluation, and deployment of machine learning models. Many companies use machine learning pipelines to improve their products, services, and operations.

Here are some examples:

- Amazon Web Services (AWS) offers a range of cloud-based services for machine learning. These include SageMaker, which simplifies the creation and management of machine learning pipelines. Its parent company, Amazon, also uses machine learning internally to power its e-commerce, voice assistant, and recommendation systems.

- Databricks is a data analytics platform that enables users to build, test, and deploy machine learning pipelines using Apache Spark. Databricks also provides a unified data lakehouse architecture that combines the best of data warehouses and data lakes for machine learning.

- Dataiku is a data science platform that helps users design, build, and run machine learning pipelines. Dataiku supports various data sources, tools, and frameworks and allows users to collaborate and monitor their machine learning projects.

- Veritone is an AI platform that leverages machine learning pipelines to analyze and enrich various types of media, such as audio, video, and text. Veritone’s machine learning pipelines can perform transcription, face recognition, sentiment analysis, and object detection tasks.

- DataRobot is an automated machine learning platform that helps users build and deploy machine learning pipelines. DataRobot automates the tasks of data preparation, feature engineering, model selection, tuning, and deployment and provides explainability and governance features.

Industries Where Machine Learning Pipelines Are Applicable

Machine learning pipelines are workflows that automate the processes of data ingestion, preprocessing, training, evaluation, and deployment of machine learning models.

They are essential for developing and deploying scalable, reliable, and efficient machine learning solutions for a wide range of applications.

Here are some industries where machine learning pipelines become applicable:

Healthcare

The first one on this list is the healthcare industry. Machine learning pipelines can help providers manage the large volumes of data they generate and support critical clinical decisions. For example, machine learning pipelines can be used to diagnose diseases, predict outcomes, recommend treatments, and monitor patients.

Transportation

Secondly, machine learning pipelines can help transportation systems optimize routes, reduce traffic, improve safety, and enhance customer experience. For example, machine learning pipelines can be used to power self-driving cars, smart traffic lights, ride-sharing platforms, and public transit systems.

Finance

Machine learning pipelines can help financial institutions detect fraud, manage risk, automate trading, and provide personalized services. For example, machine learning pipelines can be used to analyze transactions, credit scores, market trends, and customer behavior.

Agriculture

Machine learning pipelines can help farmers increase productivity, reduce costs, and improve sustainability. For example, machine learning pipelines can be used to monitor crops, soil, weather, and pests, and provide optimal irrigation, fertilization, and harvesting strategies.

Cybersecurity

Machine learning pipelines can help cybersecurity systems prevent, detect, and respond to cyberattacks. For example, machine learning pipelines can be used to identify malicious activities, anomalies, and vulnerabilities and provide adaptive defense mechanisms.

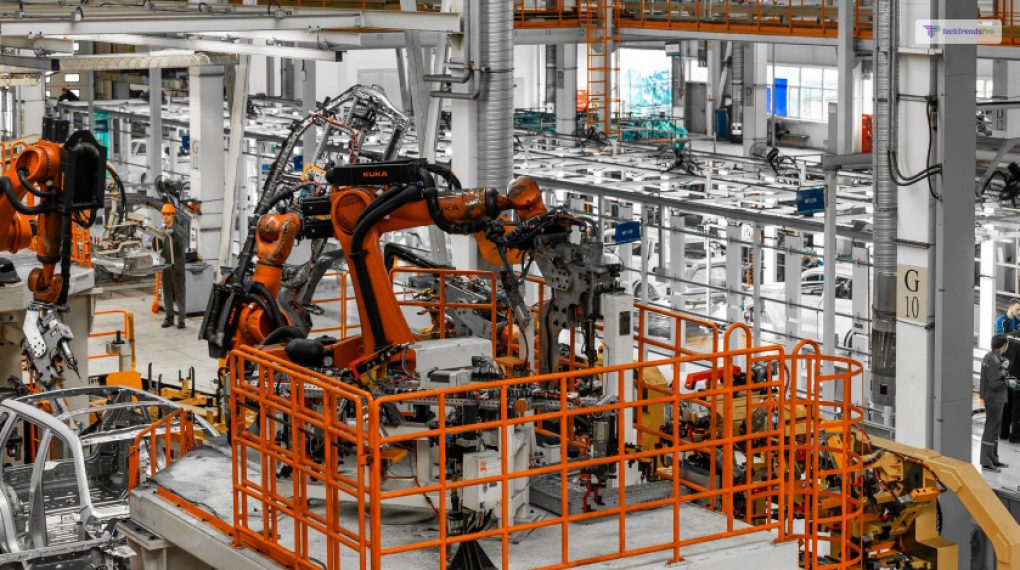

Manufacturing

Machine learning pipelines can help manufacturing systems improve quality, efficiency, and innovation. For example, machine learning pipelines can be used to optimize production processes, predict maintenance needs, detect defects, and design new products.

Marketing

Machine learning pipelines can help marketing systems increase customer loyalty, retention, and satisfaction. For example, machine learning pipelines can be used to segment customers, personalize offers, optimize campaigns, and measure performance.

Retail and customer service

Machine learning pipelines can help retail and customer service systems enhance customer experience, engagement, and satisfaction. For example, machine learning pipelines can be used to provide product recommendations, chatbots, voice assistants, and sentiment analysis.

Wrapping It Up!

In essence, a machine learning pipeline is a powerful concept and tool that can help data scientists and engineers design, develop, and deploy machine learning projects in a more efficient, reproducible, scalable, reliable, and collaborative way.

The machine learning pipeline is not a one-size-fits-all solution but rather a flexible and customizable approach that can be adapted to the specific needs and goals of each machine learning project.

The machine learning pipeline is not only a technical challenge. It is also a cultural and organizational one that requires the alignment and coordination of the people, the processes, and the technologies involved in the machine learning project.

It is not a static and fixed entity either. It is a dynamic and evolving one that requires constant monitoring and regular improvement to keep up with the changing data and business environment. In conclusion, this pipeline is not an end but rather a means to an end: delivering value and impact to customers and society through machine learning.
